This post continues from my last one, where I looked into various alternatives to traditional RDBMS Databases. Here I will cover some of the basics and go over the factors that influence the choice of a datastore in general and NoSQL Databases in particular. I will also cover the trade-offs associated with that choice.

Fundamentally, a Database is a specialized software system that lets you write/store data (i.e. create, update, delete), read it, and even do some amount of processing on it, e.g. executing aggregate functions.

In a world dominated by RDBMSs, Databases are expected to be ACID compliant; in fact, ACID compliance is a must-have and an important measure of Quality. RDBMSs meet that bar, and they have been doing that job fairly well for many decades. So, what changed recently? At the core, a handful of needs became very important:

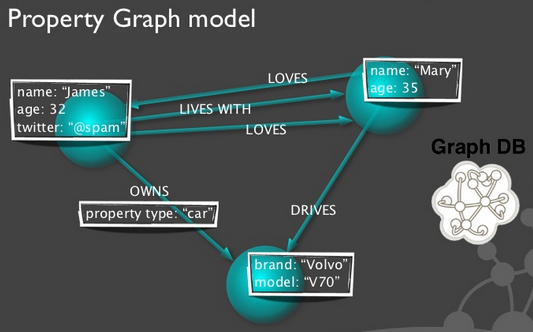

- Increased Complexity of relationships

- Need for Flexible Data Structure

- High Availability

- Scalability (typically referred to as Web Scale)

Document Databases do exceedingly well when it comes to supporting Flexible Data Structures. Column Family Databases also provide some flexibility, since each row can have a different set of attributes. However, in this post, without getting into further details on those factors, I will shift the focus to the last two points and explore how various parameters really influence the choice.

So, how does anyone achieve High Availability (HA) for any system? By building redundancy into the system, and databases are no exception: they create replicated failover nodes. Failover nodes are exact replicas of the master node and remain passive unless required. Databases usually ensure HA this way, but the challenge is different when it comes to distributed, partitioned databases. Also, it is one thing to ensure HA against a node or machine failure, and an entirely different thing to ensure that the DB is never unavailable whether a Network, Machine, Power or any other failure occurs, e.g. a Data Center goes down. Typically this is achieved by placing the replicas in different Data Centers spread over different geographies, and those replicas are not offline. This is also known as Geographically Distributed High Availability (GDHA). With replicas that far apart, Network Partition tolerance becomes critical. Not all databases support GDHA. Note that GDHA is more than Disaster Recovery (DR), where the replicated nodes remain offline and are used only when a disaster hits the master node. The focus of DR Systems is usually not limited to Databases; they keep the entire stack ready.

The other big issue is Scalability. How much can a database (read: RDBMS) grow? It can grow as much as the largest machine will allow. But what if you hit that ceiling too? The obvious answer is to add a second machine. That's correct, but can the Database then still meet the important quality measure called ACID, or be made highly Available, when some of the operations (i.e. reading, writing or processing of the Data) happen across a distributed system? The simple answer is NO, and that's when you start looking into the trade-off matrix. You take a second look at the operations as discrete activities and decide what is critical for your business and what you can give up.

Before we go any further, here is the definition of ACID for reference:

- Atomic: Atomicity refers to the ability of the database to guarantee that either all of the tasks of a transaction are performed or none of them are.

- Consistent: The consistency property ensures that the database remains in a consistent state before the start of the transaction and after the transaction is over (whether successful or not).

- Isolated: Isolation refers to the requirement that other operations/transactions cannot access or see the data in an intermediate state during a transaction.

- Durable: Durability refers to the guarantee that once the user has been notified of success, the transaction will persist, and not be undone.

Isolation is typically enforced through one of two concurrency-control mechanisms:

- Lock (also called Exclusive Lock or Pessimistic Lock): some databases allow only one user at a time to modify a record, row or document. This approach is preventive, since conflicting changes are blocked before they can happen.

- MVCC (multi-version concurrency control) or Optimistic Lock: a mechanism that guarantees consistent reads. It allows multiple users to modify a record, producing multiple conflicting versions, without acquiring an exclusive lock. The check comes when the changes are committed to the database: at that point only the first user to attempt the commit succeeds, and the others have to retry against the new version.
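The commit-time check described above can be illustrated with a minimal sketch. This is a toy in-memory store, not how any particular database implements MVCC; the names `VersionedStore` and `VersionConflict` are hypothetical and exist only for this example.

```python
class VersionConflict(Exception):
    """Raised when a commit is based on a stale version of the record."""


class VersionedStore:
    """Toy in-memory store (hypothetical); each key maps to (version, value)."""

    def __init__(self):
        self._data = {}

    def read(self, key):
        # Readers never block: they get the current version and value.
        return self._data.get(key, (0, None))

    def commit(self, key, expected_version, new_value):
        current_version, _ = self._data.get(key, (0, None))
        # The check happens only at commit time, not when editing starts.
        if current_version != expected_version:
            raise VersionConflict(
                f"{key}: expected v{expected_version}, found v{current_version}"
            )
        self._data[key] = (current_version + 1, new_value)
        return current_version + 1


store = VersionedStore()
store.commit("cart", 0, ["book"])

# Two users read the same version and prepare conflicting changes.
version_a, cart_a = store.read("cart")
version_b, cart_b = store.read("cart")

store.commit("cart", version_a, cart_a + ["pen"])  # first committer wins
try:
    store.commit("cart", version_b, cart_b + ["lamp"])
    second_commit_succeeded = True
except VersionConflict:
    second_commit_succeeded = False  # second user must re-read and retry
```

A pessimistic lock would instead have made the second user wait before editing at all; here nobody waits, but the loser of the race has to redo the work.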

Next, let's take a look at Replication, i.e. copying the datastore to a different node. High Availability is achieved by replicating a database node. Replication comes in two forms:

- Master-slave replication makes one node the authoritative copy that handles writes while slaves synchronize with the master and may handle reads.

- Peer-to-peer/Master-Master replication allows writes to any node; the nodes coordinate to synchronize their copies of the data.
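To make the master-slave form concrete, here is a rough sketch with asynchronous synchronization. The class names and the explicit `sync()` step are assumptions made for illustration; a real database ships changes over the network and handles failures, which this toy ignores.

```python
class Replica:
    """A read-only copy of the master's data (hypothetical toy class)."""

    def __init__(self):
        self.data = {}


class MasterSlaveCluster:
    """Toy cluster: writes go to the master, reads are served by replicas."""

    def __init__(self, replica_count):
        self.master = {}
        self.replicas = [Replica() for _ in range(replica_count)]
        self._pending = []  # changes not yet shipped to the replicas
        self._next = 0      # round-robin pointer for reads

    def write(self, key, value):
        # Only the master accepts writes.
        self.master[key] = value
        self._pending.append((key, value))

    def read(self, key):
        # Reads are spread across the replicas, which may lag the master.
        replica = self.replicas[self._next % len(self.replicas)]
        self._next += 1
        return replica.data.get(key)

    def sync(self):
        # Asynchronous replication: apply pending changes to every replica.
        for key, value in self._pending:
            for replica in self.replicas:
                replica.data[key] = value
        self._pending.clear()


cluster = MasterSlaveCluster(replica_count=2)
cluster.write("user:1", "Ada")
stale_read = cluster.read("user:1")  # replicas have not synced yet
cluster.sync()
fresh_read = cluster.read("user:1")
```

The stale read before `sync()` is the price of asynchronous replication; synchronous replication would avoid it by making the write wait for the replicas.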

While Database replication primarily helps to handle failover and ensures Higher Availability, it also helps Scalability. Master-Slave replication works well for Read Scalability: write operations can take place only on the Master node, and the Slaves then sync up with the Master either synchronously or asynchronously. Peer-to-Peer or Master-Master replication helps achieve both Read and Write Scalability, as both read and write operations can take place on all the replicas. In both cases, every replica holds the same full copy of the Database, so the Database can only grow as much as a single node can grow. Growing that node is traditionally known as Scaling Up or Vertical Scaling, and it should work well for most systems. However, for web scale, one needs to Scale Out (Horizontal Scaling), where data is Partitioned or Sharded across multiple nodes. This allows a Database to keep growing just by adding new hardware (usually commodity hardware). Note that each partitioned node holds a different set of data and may have its own replicas for high availability; each partitioned node is effectively a database in its own right.

Figure: Scale Up vs Scale Out
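As an illustration of Scale Out, here is a minimal sketch of hash-based partitioning, where each key is routed to exactly one shard. The names `shard_for` and `ShardedStore` are hypothetical, and hash-modulo routing is just one possible scheme assumed for this example; real systems also use range partitioning or consistent hashing.

```python
import hashlib


def shard_for(key, shard_count):
    """Map a key to a shard index using a stable hash (hypothetical helper)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


class ShardedStore:
    """Toy store where each shard is an independent dict holding part of the data."""

    def __init__(self, shard_count):
        self.shards = [{} for _ in range(shard_count)]

    def put(self, key, value):
        self.shards[shard_for(key, len(self.shards))][key] = value

    def get(self, key):
        return self.shards[shard_for(key, len(self.shards))].get(key)


store = ShardedStore(shard_count=4)
for user_id in range(100):
    store.put(f"user:{user_id}", {"id": user_id})

# Each key lives on exactly one shard, so shard sizes add up to the total.
total_keys = sum(len(shard) for shard in store.shards)
```

One caveat with plain modulo routing: changing the shard count remaps most keys, which is why consistent hashing is often preferred when nodes are added frequently.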

Now let's look at the trade-off matrix I mentioned earlier in this post. It is known as the CAP Theorem, also called Brewer's theorem. It states that it is impossible for a distributed computer system to simultaneously provide all three of the following guarantees:

- Consistency (all nodes see the same data at the same time). Note that this is a different notion of consistency from the one in ACID.

- Availability (a guarantee that every request receives a response about whether it was successful or failed)

- Partition tolerance (the system continues to operate despite arbitrary message loss or failure of part of the system)
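The trade-off can be sketched with a toy two-node store that behaves differently once the nodes can no longer talk to each other. Everything here (`TwoNodeStore`, the `"CP"`/`"AP"` mode flag, the `partitioned` switch) is a hypothetical simplification for illustration, not a model of any real datastore.

```python
class Unavailable(Exception):
    """Raised when the store refuses a request to protect consistency."""


class TwoNodeStore:
    """Toy two-replica store; mode is 'CP' or 'AP' (hypothetical labels)."""

    def __init__(self, mode):
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.nodes = [{}, {}]
        self.partitioned = False  # True while the nodes cannot communicate

    def write(self, node_id, key, value):
        if not self.partitioned:
            # Healthy network: every node gets the write.
            for node in self.nodes:
                node[key] = value
            return
        if self.mode == "CP":
            # Give up availability: reject rather than let the nodes diverge.
            raise Unavailable("cannot reach the other replica")
        # AP: stay available, accept the write locally and let nodes diverge.
        self.nodes[node_id][key] = value

    def read(self, node_id, key):
        return self.nodes[node_id].get(key)


def run(mode):
    store = TwoNodeStore(mode)
    store.write(0, "x", 1)
    store.partitioned = True  # simulate a network partition
    try:
        store.write(0, "x", 2)
        accepted = True
    except Unavailable:
        accepted = False
    return accepted, store.read(0, "x"), store.read(1, "x")


cp_result = run("CP")  # write rejected, both nodes still agree
ap_result = run("AP")  # write accepted, nodes now disagree
```

In the AP case the two nodes have to reconcile their copies once the partition heals, which is where eventual consistency comes in.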

Here is a nice summary of how different Datastores comply with the CAP Theorem, from a presentation by Aleksandar Bradic.

Finally, here is a matrix I prepared to capture the various parameters that one would consider while analyzing a Distributed DB System.

Not all values are filled. I will continue to work on this and update it further.

Connect with me on Twitter @satya_paul

Check out my storyboard on www.fanffair.com - http://www.fanffair.com/storyboard/satyajitp2011